Convert TSV format files to JSON Lines with an AWS Lambda function

Recently, I got a chance to play with AWS Lambda functions.

We have a batch job that dumps DB tables to .tsv files and uploads them to an S3 bucket every day.

My mission is to transform the .tsv files into JSON format, split the output into gzip files of 1M JSON lines each, and upload them to another S3 bucket.

Architecture

I decided to use an S3 event notification to invoke a Lambda function, which in turn triggers an AWS Batch job that does the transformation.

The Lambda function receives the upload event from the S3 bucket and then triggers the Batch job.

The Batch job runs a Python Docker image, pulled from ECR, that transforms the .tsv file into JSON Lines and uploads the result to another S3 bucket.

Steps

Create new Lambda function

First of all, you need to create a new Lambda function.

Once you have created the Lambda function, you need to assign an "execution role" to it.

Execution role

The execution role of Lambda function is actually an IAM role.

The exact IAM policies depend on your use case, but at minimum you need AWSBatchFullAccess and AWSLambdaBasicExecutionRole.
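If you prefer to create the role from a script instead of the console, a minimal sketch with boto3 might look like this. It assumes you pass in a boto3 IAM client (`boto3.client("iam")`); the role name in the usage line is hypothetical. The trust policy lets the Lambda service assume the role, and the two ARNs are the AWS managed policies named above.

```python
import json

# Trust policy that lets the Lambda service assume the role
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS managed policies mentioned in the text
MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/AWSBatchFullAccess",
    "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole",
]


def create_lambda_execution_role(iam, role_name):
    """Create the execution role and attach the managed policies.

    `iam` is expected to be a boto3 IAM client. Returns the new role's ARN.
    """
    response = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    for arn in MANAGED_POLICY_ARNS:
        iam.attach_role_policy(RoleName=role_name, PolicyArn=arn)
    return response["Role"]["Arn"]
```

Usage: `create_lambda_execution_role(boto3.client("iam"), "tsv-to-json-lambda")`, then select that role as the function's execution role.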

Lambda function content

A Lambda function can be written in several programming languages. I use Python in this example.

Each notification event invokes the lambda_handler function and passes the related information (e.g. bucket name, object key, etc.) in the event argument.

I extract the bucket name and object key from the S3 notification event, split the key into the database name, table name, and date, and pass those to the Batch job as environment variables.

```python
import boto3

# Create the Batch client once, outside the handler, so warm invocations reuse it
client = boto3.client('batch', region_name='us-west-2')


def lambda_handler(event, context):
    for record in event['Records']:
        bucket_name = record['s3']['bucket']['name']  # not passed to the job here
        object_key = record['s3']['object']['key']

        # Object keys are laid out as DB_NAME/DB_TABLE/DATE/...
        path_key = object_key.split('/')
        DB_NAME = path_key[0]
        DB_TABLE = path_key[1]
        DATE = path_key[2]

        # Submit one Batch job per uploaded object and hand the key parts
        # to the container as environment variables
        client.submit_job(
            jobName='batch-tsv-to-json',
            jobQueue='batch-tsv-to-json',
            jobDefinition='batch-tsv-to-json:1',
            containerOverrides={
                'environment': [
                    {'name': 'DB_NAME', 'value': DB_NAME},
                    {'name': 'DB_TABLE', 'value': DB_TABLE},
                    {'name': 'DATE', 'value': DATE},
                ]
            },
        )

    return 0
```

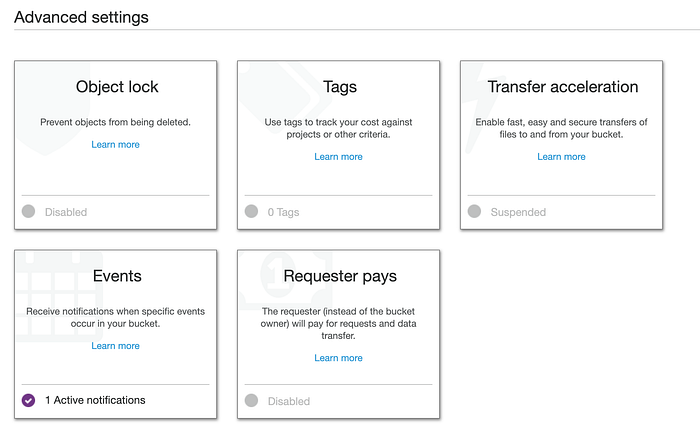
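The handler only reads a few fields from each event record. One detail worth knowing: S3 URL-encodes object keys in event notifications (a space arrives as `+`, for example), so decoding the key with `urllib.parse.unquote_plus` before splitting it is a safer habit. This sketch assumes keys follow the `DB_NAME/DB_TABLE/DATE/...` layout implied by the handler's indexing; the sample event is trimmed to the fields used, and the bucket and key names in it are made up.

```python
from urllib.parse import unquote_plus


def parse_record(record):
    """Return (bucket, db_name, db_table, date) for one S3 event record.

    Assumes object keys look like DB_NAME/DB_TABLE/DATE/<file name>.
    """
    bucket = record["s3"]["bucket"]["name"]
    # S3 URL-encodes keys in notifications; decode before splitting
    key = unquote_plus(record["s3"]["object"]["key"])
    parts = key.split("/")
    if len(parts) < 3:
        raise ValueError(f"unexpected key layout: {key!r}")
    return bucket, parts[0], parts[1], parts[2]


# A trimmed-down notification event containing only the fields used above
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "my-source-bucket"},
                "object": {"key": "sales_db/order+items/2019-06-01/part-0000.tsv.gz"},
            }
        }
    ]
}

for rec in sample_event["Records"]:
    print(parse_record(rec))
```

Feeding a sample event like this through the parsing logic is also a quick way to check the key layout locally before wiring up the real trigger.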

Enable S3 bucket notification

Next, I added an event notification in the source S3 bucket's properties.

Add one event notification that targets the Lambda function. You can choose which event types trigger it (for example, all object-created events, or only PUT).

With this in place, every time a new object is put into the S3 bucket, it triggers the Lambda function.

Don’t forget to check that the S3 trigger appears, and is enabled, on the Lambda function.
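The same setup can be scripted. This is a minimal boto3 sketch, assuming you pass in boto3 `s3` and `lambda` clients and already know the function's name and ARN; the optional suffix filter (e.g. `.gz`, since the files here are gzip) is an assumption about your key naming. S3 needs a resource-based permission to invoke the function, which the console normally adds for you; when scripting, add it first, because S3 validates the destination when the configuration is saved.

```python
def build_notification_config(function_arn, suffix=None):
    """Build a NotificationConfiguration that sends object-created events to Lambda."""
    lambda_config = {
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:*"],
    }
    if suffix:
        # Only objects whose key ends with `suffix` trigger the function
        lambda_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"LambdaFunctionConfigurations": [lambda_config]}


def enable_notification(s3, lambda_client, bucket, function_name, function_arn, suffix=None):
    """Allow S3 to invoke the function, then attach the notification to the bucket.

    `s3` and `lambda_client` are expected to be boto3 clients.
    """
    # The permission must exist before S3 accepts the notification configuration
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"s3-invoke-{bucket}",
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
    )
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(function_arn, suffix),
    )
```

Note that `put_bucket_notification_configuration` replaces the bucket's whole notification configuration, so merge in any existing rules before calling it on a bucket that already has some.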

Push image to ECR

The Batch job needs a Docker image to run its container on the EC2 instances it launches. The image can come from different registries; here I use Amazon ECR.

Create converter Python docker image

Create a Docker image that converts .tsv files to JSON Lines:

https://github.com/moonape1226/aws-s3-tsv-to-json/blob/master/app/converter.py
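To show the core transformation in isolation, here is a minimal local sketch of the idea (not the code from converter.py). It assumes a gzip-compressed TSV whose first row holds the column names, values without embedded tabs or newlines, and that every value can stay a string. It streams rows with `csv`, writes each one as a JSON object per line, and starts a new gzip output file every `lines_per_file` lines (1,000,000 by default, matching the 1M split). The `part-NNNNN.json.gz` naming is hypothetical, and the S3 download and upload steps are left out.

```python
import csv
import gzip
import json
import os


def tsv_gz_to_jsonl_gz(src_path, out_dir, lines_per_file=1_000_000):
    """Convert a gzip TSV file into gzip JSON Lines chunks.

    Assumes the first TSV row is a header. Returns the list of files written.
    """
    os.makedirs(out_dir, exist_ok=True)
    written = []
    out = None
    count = 0
    try:
        with gzip.open(src_path, "rt", newline="", encoding="utf-8") as src:
            # QUOTE_NONE: treat quote characters in raw DB dumps as plain text
            reader = csv.DictReader(src, delimiter="\t", quoting=csv.QUOTE_NONE)
            for row in reader:
                # Start a new chunk on the first row and whenever the current one is full
                if count % lines_per_file == 0:
                    if out is not None:
                        out.close()
                    path = os.path.join(out_dir, f"part-{len(written):05d}.json.gz")
                    out = gzip.open(path, "wt", encoding="utf-8")
                    written.append(path)
                out.write(json.dumps(row, ensure_ascii=False) + "\n")
                count += 1
    finally:
        if out is not None:
            out.close()
    return written
```

Streaming row by row keeps memory flat regardless of table size, which matters more than speed when the container's disk and RAM are modest.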

Upload to ECR

Create a new ECR repository, authenticate Docker with your account, and push your image.

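Authenticate with your AWS account:

```shell
aws ecr get-login --region region --no-include-email
# prints a login command like the following; run it to log in
docker login -u AWS -p password https://aws_account_id.dkr.ecr.us-east-1.amazonaws.com
```

On AWS CLI version 2, `get-login` has been removed; use `aws ecr get-login-password --region region | docker login --username AWS --password-stdin aws_account_id.dkr.ecr.region.amazonaws.com` instead.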
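If you need the same credentials inside a script, the ECR API exposes them too. boto3's `get_authorization_token` returns a base64-encoded `AWS:<password>` token plus the registry endpoint; this small sketch only decodes one `authorizationData` entry and leaves the actual `docker login` to you.

```python
import base64


def docker_credentials(auth_data):
    """Split one ECR authorizationData entry into (username, password, registry).

    `auth_data` is an item from ecr.get_authorization_token()["authorizationData"];
    its token is base64 of "AWS:<password>".
    """
    token = base64.b64decode(auth_data["authorizationToken"]).decode("utf-8")
    username, password = token.split(":", 1)
    return username, password, auth_data["proxyEndpoint"]
```

Usage: `docker_credentials(boto3.client("ecr", region_name="us-west-2").get_authorization_token()["authorizationData"][0])`.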

Tag and push the image:

```shell
docker tag e9ae3c220b23 aws_account_id.dkr.ecr.region.amazonaws.com/my-web-app
docker push aws_account_id.dkr.ecr.region.amazonaws.com/my-web-app
```

See the following documents for more information:

- https://docs.aws.amazon.com/en_us/AmazonECR/latest/userguide/Registries.html#registry_auth

- https://docs.aws.amazon.com/en_us/AmazonECR/latest/userguide/docker-push-ecr-image.html

Create Batch job

To run a Batch job, you need a job definition, a job queue, a compute environment, and, if you want to customize the instances (for example, the disk size), an EC2 launch template.

Below are my Batch job definition settings. Remember to set the path of the ECR image you just pushed in the job definition:

Batch job role settings:
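For a scripted setup, a job definition along these lines could be registered with boto3. This is a sketch under assumptions: a container job on EC2, an image URI and job role ARN you supply, and 1 vCPU / 2048 MiB as placeholder sizes. The name matches the `batch-tsv-to-json` definition the Lambda function submits to; the first registration of a name gets revision 1, which is what `batch-tsv-to-json:1` refers to.

```python
def build_job_definition(image_uri, job_role_arn, vcpus=1, memory_mib=2048):
    """Keyword arguments for batch.register_job_definition (container job on EC2)."""
    return {
        "jobDefinitionName": "batch-tsv-to-json",
        "type": "container",
        "containerProperties": {
            # The ECR image pushed in the previous step
            "image": image_uri,
            # IAM role the container assumes, e.g. for reading and writing S3
            "jobRoleArn": job_role_arn,
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }
```

Usage: `boto3.client("batch", region_name="us-west-2").register_job_definition(**build_job_definition(image_uri, role_arn))`. The job role needs a trust policy for `ecs-tasks.amazonaws.com` so the container can assume it.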

See the following documents for more information:

- https://docs.aws.amazon.com/en_us/batch/latest/userguide/jobs.html

- https://docs.aws.amazon.com/en_us/batch/latest/userguide/compute_environments.html

- https://docs.aws.amazon.com/en_us/AWSEC2/latest/UserGuide/ec2-launch-templates.html

Check result

Once all the steps are done, a Batch job should run automatically every time a new object is uploaded to the source S3 bucket.

The results should then appear in the destination S3 bucket.

You can also check the execution results of the Lambda function and the Batch job in CloudWatch.
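Besides CloudWatch, you can poll a job's status directly. `submit_job` returns a `jobId` (the handler above discards it, so you would need to log it or look it up in the Batch console), and `describe_jobs` reports a status that moves through SUBMITTED, PENDING, RUNNABLE, STARTING and RUNNING before ending in SUCCEEDED or FAILED. A minimal polling sketch, assuming a boto3 Batch client is passed in; the poll interval and timeout are arbitrary defaults.

```python
import time

TERMINAL_STATUSES = {"SUCCEEDED", "FAILED"}


def wait_for_job(batch, job_id, poll_seconds=30, timeout_seconds=3600):
    """Poll AWS Batch until the job finishes and return its final status.

    `batch` is expected to be a boto3 Batch client.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        job = batch.describe_jobs(jobs=[job_id])["jobs"][0]
        status = job["status"]
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} still {status} after {timeout_seconds}s")
        time.sleep(poll_seconds)
```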

Some reminders

- The S3 bucket, Batch job, ECR repository and Lambda function should be in the same AWS account and region

- The default root disk size is 8 GB. If you need more, you may need to increase it in the launch template or AMI

- All files in this example are gzip-compressed
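For the disk-size reminder, a launch template that enlarges the root EBS volume could be built as below. This is a sketch: it assumes the AMI's root device is `/dev/xvda` (true for Amazon Linux 2 based AMIs, but check yours), and the size and volume type are placeholders.

```python
def build_launch_template_data(volume_size_gib, device_name="/dev/xvda"):
    """LaunchTemplateData with a larger root EBS volume.

    The root device name depends on the AMI; /dev/xvda is an assumption.
    """
    return {
        "BlockDeviceMappings": [
            {
                "DeviceName": device_name,
                "Ebs": {
                    "VolumeSize": volume_size_gib,
                    "VolumeType": "gp3",
                    "DeleteOnTermination": True,
                },
            }
        ]
    }
```

Usage: `boto3.client("ec2").create_launch_template(LaunchTemplateName="batch-tsv-to-json-disk", LaunchTemplateData=build_launch_template_data(100))`, then reference that template in the compute environment's `launchTemplate` setting. The template name here is hypothetical.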

Thanks for reading. Feel free to share your thoughts and comments.