Achieving zero downtime when upgrading a Nomad cluster

Recently, our team has been upgrading the version of our Nomad cluster. To prevent downtime, we need to gracefully shut down all running jobs on the node being upgraded and start replacement jobs on the other nodes. Even though Nomad provides the drain command to make this process easier, there are still many things we need to consider.

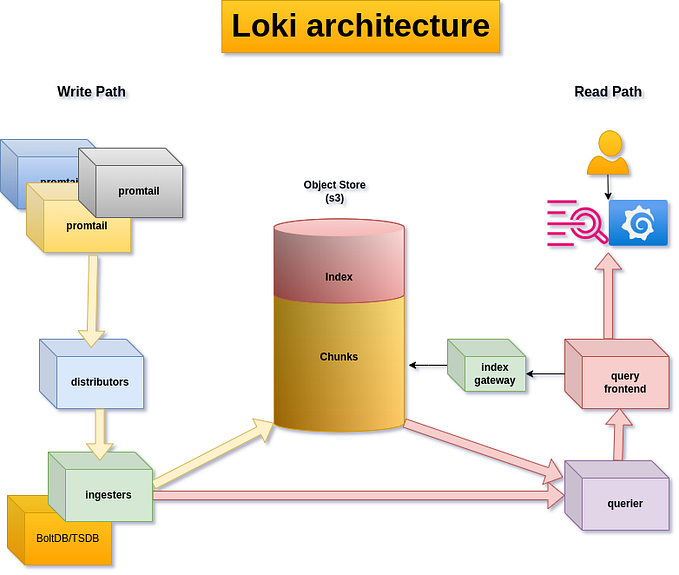

Service Architecture

The picture above shows our service architecture. We use an AWS Application Load Balancer (ALB) to receive traffic from the Internet and perform SSL offloading. The requests are then forwarded to our internal load balancer, Traefik. Once an application's Nomad job is registered in Consul, Traefik uses Consul to discover the IP address and port of the container[1][2].

Below, I walk through each component we need to take care of, one by one:

Docker container

When Nomad drains jobs from a worker node, it stops each container (much like docker stop), sending SIGTERM by default[3]. The application has to handle this signal, gracefully close its connections and finish in-flight requests before exiting.

It’s worth noting that when entrypoint.sh starts the application in the background, the application is not PID 1, so it will not receive the kill signal from Docker (the signal goes to the shell running entrypoint.sh instead). We need to use trap in entrypoint.sh to forward the signal to the application.

For example, to pass the kill signal to Nginx running in the background:

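```shell
#!/usr/bin/env bash
nginx -g "daemon off;" &   # foreground mode, so $! is the Nginx master PID
NGINX_PID="$!"
# Forward QUIT/TERM/INT as SIGQUIT (graceful stop), wait, exit with Nginx's status
trap 'kill -QUIT "$NGINX_PID"; wait "$NGINX_PID"; exit $?' QUIT TERM INT
wait "$NGINX_PID"          # keep the entrypoint alive until Nginx exits
```

There is a great article discussing this problem in more detail[4].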
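To check the forwarding behaviour without Nginx, here is a minimal, self-contained sketch of the same pattern. The functions fake_app and run_entrypoint are hypothetical: fake_app (a subshell running sleep) stands in for the real application, and run_entrypoint plays the role of entrypoint.sh. It shows SIGTERM sent to the entrypoint reaching the background process, which shuts down cleanly before the entrypoint exits with the application's status.

```shell
#!/usr/bin/env bash
# Sketch of the entrypoint.sh signal-forwarding pattern, runnable without Nginx.
# fake_app is a hypothetical stand-in for the real application.

fake_app() {
  # The "application": a long-running worker process.
  sleep 1000 &
  worker_pid=$!
  # Graceful shutdown on SIGTERM: stop the worker, log, exit with status 0.
  trap 'kill "$worker_pid" 2>/dev/null; echo "app: received TERM, exiting"; exit 0' TERM
  wait "$worker_pid"
}

run_entrypoint() {
  # Start the application in the background, so it is not PID 1
  # and would not see Docker's stop signal on its own.
  fake_app &
  app_pid=$!
  # Forward TERM/INT to the application, wait for it to finish,
  # then exit with the application's exit status.
  trap 'echo "entrypoint: forwarding signal as TERM"; kill -TERM "$app_pid" 2>/dev/null; wait "$app_pid"; exit $?' TERM INT
  # Keep the entrypoint alive while the application runs.
  wait "$app_pid"
}
```

Starting run_entrypoint in the background and sending it SIGTERM should log both lines and end with status 0; in a real image, fake_app would be replaced by the actual server command.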

Consul & Nomad

Besides handling the kill signal in the application, we also need to stop new requests from being routed to the container. If the application keeps receiving new requests, it may not be able to finish shutting down, and eventually Nomad will forcibly kill it (with SIGKILL) once kill_timeout expires[5].

Nomad provides shutdown_delay to configure how long to wait between deregistering the task's services from Consul and sending the kill signal to the task[6].

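It’s better to configure shutdown_delay to be longer than the time it takes for requests to stop arriving at the container (Traefik first has to notice the deregistration, and in-flight requests have to finish), and kill_timeout to be longer than the time the application needs to shut down gracefully.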
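The two timeouts cover different phases, so it can help to check them separately. Below is a hypothetical helper, check_shutdown_timings, that encodes this reasoning; the propagation time and longest request duration are assumptions you would have to estimate or measure for your own setup, not values Nomad reports.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check Nomad shutdown timings (all values in seconds).
#   $1 propagation    - assumed time for Traefik to notice the Consul deregistration
#   $2 longest_req    - longest in-flight request that should be allowed to finish
#   $3 app_shutdown   - measured time the app needs to exit after SIGTERM
#   $4 shutdown_delay - value from the Nomad job
#   $5 kill_timeout   - value from the Nomad job
# Prints warnings plus the worst-case stop time; returns 1 if a check fails.
check_shutdown_timings() {
  local propagation=$1 longest_req=$2 app_shutdown=$3 shutdown_delay=$4 kill_timeout=$5
  local status=0
  # Requests must stop arriving before Nomad sends the kill signal.
  if [ "$shutdown_delay" -lt $((propagation + longest_req)) ]; then
    echo "WARN: shutdown_delay=${shutdown_delay}s is shorter than $((propagation + longest_req))s; requests may still arrive after SIGTERM"
    status=1
  fi
  # The app must finish shutting down before Nomad escalates to SIGKILL.
  if [ "$kill_timeout" -le "$app_shutdown" ]; then
    echo "WARN: kill_timeout=${kill_timeout}s does not exceed ${app_shutdown}s; the app may be SIGKILLed mid-shutdown"
    status=1
  fi
  # From deregistration to a forced kill, one allocation can take up to this long.
  echo "worst-case stop time per allocation: $((shutdown_delay + kill_timeout))s"
  return "$status"
}
```

For example, with an assumed 5 s propagation time, 10 s requests and a 20 s application shutdown, shutdown_delay = 20 and kill_timeout = 30 pass both checks, and each allocation can take up to 50 s to stop, which also bounds how long a node drain takes per wave of allocations.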

Traefik

Once the container is removed from the Consul service registry and Traefik picks up the change, Traefik stops routing new traffic to it.

However, if you run Traefik itself as a Nomad job, you also need to tune kill_timeout and shutdown_delay in its job configuration.

ALB

Currently, we run Traefik on static port 80 on each EC2 instance, and we register the EC2 instances as targets in the ALB's target group.

When the Traefik service on a node is deregistered from Consul and stopped during the draining process, clients will see HTTP 502 errors for a short period, whose length depends on the ALB health check settings, until the ALB marks that instance as unhealthy.
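As a back-of-the-envelope estimate (an approximation, not a measurement): the ALB keeps routing to the instance until the target fails UnhealthyThresholdCount consecutive health checks, performed once per HealthCheckIntervalSeconds, so the 502 window is roughly the product of the two. The helper name approx_502_window is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough upper bound (seconds) on how long the ALB keeps
# sending traffic to an instance whose Traefik has already stopped.
# Approximation: one failed check per interval; the target is marked unhealthy
# after <unhealthy_threshold> consecutive failures (check timeouts ignored).
approx_502_window() {
  local interval=$1 unhealthy_threshold=$2
  echo $(( interval * unhealthy_threshold ))
}

approx_502_window 30 2   # prints 60
approx_502_window 10 2   # prints 20
```

Shortening the interval narrows the window but does not close it, which is why deregistering the instance before draining is the safer approach.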

To prevent this, we need to manually deregister the EC2 instance from the ALB before starting the draining process. The health check interval and the deregistration delay are two important parameters when deregistering a target from the ALB[7].
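A minimal sketch of this manual step, assuming the AWS CLI and the Nomad CLI are available and that Traefik is registered in the target group on port 80. The function names drain_node and run, the DRY_RUN switch and the 10m drain deadline are hypothetical choices; the target group ARN, instance ID and Nomad node ID must be supplied by you. The aws elbv2 wait target-deregistered step blocks until the target has finished draining (the deregistration delay has elapsed or its connections have closed), so the ALB no longer sends traffic to the instance when the Nomad drain starts.

```shell
#!/usr/bin/env bash
# Sketch: take one EC2 instance out of the ALB, then drain its Nomad node.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

drain_node() {
  local tg_arn=$1 instance_id=$2 node_id=$3
  local target="Id=${instance_id},Port=80"   # Traefik listens on static port 80

  # 1. Stop the ALB from routing new requests to this instance.
  run aws elbv2 deregister-targets --target-group-arn "$tg_arn" --targets "$target" || return 1
  # 2. Block until the target is fully deregistered (draining finished).
  run aws elbv2 wait target-deregistered --target-group-arn "$tg_arn" --targets "$target" || return 1
  # 3. Migrate the Nomad allocations off the node; the command monitors the
  #    drain until it completes or the deadline forces remaining allocations off.
  run nomad node drain -enable -yes -deadline 10m "$node_id"
}
```

Running DRY_RUN=1 drain_node with your target group ARN, instance ID and node ID prints the three commands instead of executing them, which is a cheap way to review the sequence before pointing it at a real cluster. After the upgrade, the instance has to be registered with the target group again.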

Conclusion

To sum up, in order to achieve zero downtime in a multi-layer container service architecture during a node upgrade, we need to handle kill signals, deregister services before stopping them, and set all the related timeout parameters correctly.

However, our current implementation cannot yet remove targets from the ALB automatically. We will keep working on this architecture to see whether we can automate the whole upgrade process.

Finally, this article was inspired by an article about achieving zero downtime in Kubernetes, which is definitely worth a read[8].

References

[1] https://admantium.com/blog/ic08_service_discovery/

[2] https://doc.traefik.io/traefik/v1.7/configuration/backends/consulcatalog/

[3] https://learn.hashicorp.com/tutorials/nomad/job-update-handle-signals

[4] https://medium.com/@gchudnov/trapping-signals-in-docker-containers-7a57fdda7d86

[5] https://www.nomadproject.io/docs/job-specification/task#kill_timeout

[6] https://www.nomadproject.io/docs/job-specification/task#shutdown_delay

[7] https://docs.aws.amazon.com/elasticloadbalancing/latest/application/load-balancer-target-groups.html#deregistration-delay

[8] https://blog.gruntwork.io/zero-downtime-server-updates-for-your-kubernetes-cluster-902009df5b33